MRAN is getting shut down - what else is there for reproducibility with R, or why reproducibility is on a continuum?


You expect me to read this long ass blog post?

If you’re too busy to read this blog post, know that I respect your time. The table below summarizes this blog post:

| Need | Solution |
|---|---|
| I want to start a project and make it reproducible. | {renv} and Docker |
| There’s an old script lying around that I want to run. | {groundhog} and Docker |
| I want to work inside an environment that enables me to run code in a reproducible way. | Docker and the Posit CRAN mirror. |

But this table doesn’t show the whole picture, especially the issues with relying so much on Docker. So if you’re interested in making science and your work reproducible and robust, my advice is that you stop doomscrolling on social media and keep on reading. If at the end of the blog post you think that this was a waste of time, just send an insult my way, that’s ok.

I learnt last week that MRAN is going to get shut down this year. For those of you that don’t know, MRAN was a CRAN mirror managed by Microsoft. What made MRAN stand out was the fact that Microsoft took daily snapshots of CRAN, and it was thus possible to quite easily install old packages using the {checkpoint} package. This was a good thing for reproducibility, and on Windows and macOS it was even possible to install binary packages, so there was no need to compile them from source.

Last year I had the opportunity to teach a course on building reproducible analytical pipelines at the University of Luxembourg, and made my teaching material available as an ebook that you can find here. I also wrote some blog posts about reproducibility and it looks like I will be continuing this trend for the foreseeable future.

So in this blog post I’m going to show what you, as an R user, can do to make your code reproducible now that MRAN is getting shut down. MRAN is not the only option for reproducibility, and in this blog post I’m going to present everything I know about the other options. So if you happen to know of some solution that I don’t discuss here, please leave a comment here. But this blog post is not just a tutorial. I will also discuss what I think is a major risk that is coming and what, maybe, we can do to avoid it.

Reproducibility is on a continuum

Reproducibility is on a continuum, and depending on the nature of your project, different tools are needed. First, let’s understand what I mean by “reproducibility is on a continuum”. Let’s suppose that you have written a script that produces some output. Here is the list of everything that can influence the output (other than the mathematical algorithm running under the hood):

- Version of the programming language used;

- Versions of the packages/libraries of said programming language used;

- Operating System, and its version;

- Versions of the underlying system libraries (which often go hand in hand with OS version, but not necessarily);

- Hardware that you run all that software stack on.

So by “reproducibility is on a continuum”, what I mean is that you could set up your project in a way that none, one, two, three, four or all of the preceding items are taken into consideration when making your project reproducible.
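To make the list above a bit more concrete, here’s a small sketch that asks base R about most of these items on the machine it runs on (the describe_environment() name is made up for this example). Package versions are left out on purpose, since that’s what tools like {renv} are for, and hardware is only described by its CPU architecture.

```r
# Hypothetical helper (not from any package): gather, with base R only, some of
# the things listed above that can influence the output of a script.
describe_environment <- function() {
  si <- utils::sessionInfo()
  list(
    r_version = R.version.string,         # version of the programming language
    os = si$running,                      # operating system and its version
    system_libraries = extSoftVersion(),  # versions of zlib, PCRE, ICU, iconv, ...
    lapack = La_version(),                # version of the LAPACK library R uses
    platform = R.version$platform,        # build triplet, e.g. "x86_64-pc-linux-gnu"
    machine = Sys.info()[["machine"]]     # CPU architecture, e.g. "x86_64" or "arm64"
  )
}

str(describe_environment())
```

Saving this output alongside your results does not make them reproducible by itself, but it documents where on the continuum your project sits.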

There is, however, something else to consider. Above, I wrote “let’s suppose you have written a script”, which means that you actually have a script lying around that was written in a particular programming language, and which makes use of a known set of packages/libraries. So for example, if my script uses the following R packages:

- dplyr

- tidyr

- ggplot2

I obviously know this list, and if I want to make my script reproducible, I should take note of the versions of these 3 packages (and potentially of their own dependencies). However, what if you don’t know which packages are going to be used? This happens when you want to set up an environment that is frozen, and then distribute this environment. Developers will then all work on the same base environment, but you cannot possibly list all the packages that are going to be used because you have no idea what the developers will end up using (and remember that future you is included in these developers, and you should always try to be nice to future you).

So this means that we have two scenarios:

- Scenario 1: I have a script (or several), and want to make sure that it will always produce the same output;

- Scenario 2: I don’t know what I (or my colleagues) will develop, but we want to use the same environment across the organization to develop and deploy data products.

Turns out that the solutions to these two scenarios are different, but available in R, even though we soon won’t be able to count on MRAN anymore. HOWEVER! R won’t suffice for this job.

Scenario 1: making a script reproducible

Ok, so let’s suppose that I want to make a script reproducible, or at least increase the probability that others (including future me) will be able to run this script and get the exact same output as I originally did. If you want to minimize the amount of time spent doing it, the minimum you should do is use {renv}. {renv} allows you to create a file called renv.lock (this is what renv::snapshot() does). You can then distribute this file to others alongside the code of your project, or the paper, data, whatever. Nothing else is required from you; if people have this file, they should be able to set up an environment that closely mimics the one that was used to get the results originally (but ideally, you’d invest a bit more time to make your script run anywhere, for example by avoiding setting absolute paths that only exist on your machine).
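To give an idea of the bookkeeping {renv} does for you, here’s a base R sketch (record_versions() is a made-up name) that lists the installed versions of some packages together with their recursive hard dependencies, which is roughly the kind of information that ends up in renv.lock. It assumes that the packages are installed in the current library.

```r
# Hypothetical helper: versions of `pkgs` and of their recursive hard
# dependencies, looked up among the locally installed packages.
record_versions <- function(pkgs, db = utils::installed.packages()) {
  # Recursive "hard" dependencies: those needed to install and load `pkgs`
  deps <- tools::package_dependencies(
    pkgs, db = db,
    which = c("Depends", "Imports", "LinkingTo"),
    recursive = TRUE
  )
  all_pkgs <- sort(unique(c(pkgs, unlist(deps, use.names = FALSE))))
  # Installed version of each package, as a character vector named by package
  vapply(all_pkgs,
         function(p) as.character(utils::packageVersion(p)),
         character(1))
}

# For the script above, this would be record_versions(c("dplyr", "tidyr", "ggplot2"))
record_versions("stats")
```

Of course, {renv} records much more than this (where each package came from, a hash of its contents, the R version), which is why I would not bother writing this by hand.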

Let’s take a look at such an renv.lock file:

```json
{
  "R": {
    "Version": "4.2.1",
    "Repositories": [
      {
        "Name": "CRAN",
        "URL": "http://cran.rstudio.com"
      }
    ]
  },
  "Packages": {
    "MASS": {
      "Package": "MASS",
      "Version": "7.3-58.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "762e1804143a332333c054759f89a706",
      "Requirements": []
    },
    "Matrix": {
      "Package": "Matrix",
      "Version": "1.5-1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "539dc0c0c05636812f1080f473d2c177",
      "Requirements": [
        "lattice"
      ]
    },
    "R6": {
      "Package": "R6",
      "Version": "2.5.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "470851b6d5d0ac559e9d01bb352b4021",
      "Requirements": []
    },
    "RColorBrewer": {
      "Package": "RColorBrewer",
      "Version": "1.1-3",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "45f0398006e83a5b10b72a90663d8d8c",
      "Requirements": []
    },
    "cli": {
      "Package": "cli",
      "Version": "3.4.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "0d297d01734d2bcea40197bd4971a764",
      "Requirements": []
    },
    "colorspace": {
      "Package": "colorspace",
      "Version": "2.0-3",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "bb4341986bc8b914f0f0acf2e4a3f2f7",
      "Requirements": []
    },
    "digest": {
      "Package": "digest",
      "Version": "0.6.29",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "cf6b206a045a684728c3267ef7596190",
      "Requirements": []
    },
    "fansi": {
      "Package": "fansi",
      "Version": "1.0.3",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "83a8afdbe71839506baa9f90eebad7ec",
      "Requirements": []
    },
    "farver": {
      "Package": "farver",
      "Version": "2.1.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "8106d78941f34855c440ddb946b8f7a5",
      "Requirements": []
    },
    "ggplot2": {
      "Package": "ggplot2",
      "Version": "3.3.6",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "0fb26d0674c82705c6b701d1a61e02ea",
      "Requirements": [
        "MASS",
        "digest",
        "glue",
        "gtable",
        "isoband",
        "mgcv",
        "rlang",
        "scales",
        "tibble",
        "withr"
      ]
    },
    "glue": {
      "Package": "glue",
      "Version": "1.6.2",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "4f2596dfb05dac67b9dc558e5c6fba2e",
      "Requirements": []
    },
    "gtable": {
      "Package": "gtable",
      "Version": "0.3.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "36b4265fb818f6a342bed217549cd896",
      "Requirements": []
    },
    "isoband": {
      "Package": "isoband",
      "Version": "0.2.5",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "7ab57a6de7f48a8dc84910d1eca42883",
      "Requirements": []
    },
    "labeling": {
      "Package": "labeling",
      "Version": "0.4.2",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "3d5108641f47470611a32d0bdf357a72",
      "Requirements": []
    },
    "lattice": {
      "Package": "lattice",
      "Version": "0.20-45",
      "Source": "Repository",
      "Repository": "CRAN",
      "Hash": "b64cdbb2b340437c4ee047a1f4c4377b",
      "Requirements": []
    },
    "lifecycle": {
      "Package": "lifecycle",
      "Version": "1.0.3",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "001cecbeac1cff9301bdc3775ee46a86",
      "Requirements": [
        "cli",
        "glue",
        "rlang"
      ]
    },
    "magrittr": {
      "Package": "magrittr",
      "Version": "2.0.3",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "7ce2733a9826b3aeb1775d56fd305472",
      "Requirements": []
    },
    "mgcv": {
      "Package": "mgcv",
      "Version": "1.8-40",
      "Source": "Repository",
      "Repository": "CRAN",
      "Hash": "c6b2fdb18cf68ab613bd564363e1ba0d",
      "Requirements": [
        "Matrix",
        "nlme"
      ]
    },
    "munsell": {
      "Package": "munsell",
      "Version": "0.5.0",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "6dfe8bf774944bd5595785e3229d8771",
      "Requirements": [
        "colorspace"
      ]
    },
    "nlme": {
      "Package": "nlme",
      "Version": "3.1-159",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "4a0b3a68f846cb999ff0e8e519524fbb",
      "Requirements": [
        "lattice"
      ]
    },
    "pillar": {
      "Package": "pillar",
      "Version": "1.8.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "f2316df30902c81729ae9de95ad5a608",
      "Requirements": [
        "cli",
        "fansi",
        "glue",
        "lifecycle",
        "rlang",
        "utf8",
        "vctrs"
      ]
    },
    "pkgconfig": {
      "Package": "pkgconfig",
      "Version": "2.0.3",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "01f28d4278f15c76cddbea05899c5d6f",
      "Requirements": []
    },
    "purrr": {
      "Package": "purrr",
      "Version": "0.3.5",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "54842a2443c76267152eface28d9e90a",
      "Requirements": [
        "magrittr",
        "rlang"
      ]
    },
    "renv": {
      "Package": "renv",
      "Version": "0.16.0",
      "Source": "Repository",
      "Repository": "CRAN",
      "Hash": "c9e8442ab69bc21c9697ecf856c1e6c7",
      "Requirements": []
    },
    "rlang": {
      "Package": "rlang",
      "Version": "1.0.6",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "4ed1f8336c8d52c3e750adcdc57228a7",
      "Requirements": []
    },
    "scales": {
      "Package": "scales",
      "Version": "1.2.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "906cb23d2f1c5680b8ce439b44c6fa63",
      "Requirements": [
        "R6",
        "RColorBrewer",
        "farver",
        "labeling",
        "lifecycle",
        "munsell",
        "rlang",
        "viridisLite"
      ]
    },
    "tibble": {
      "Package": "tibble",
      "Version": "3.1.8",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "56b6934ef0f8c68225949a8672fe1a8f",
      "Requirements": [
        "fansi",
        "lifecycle",
        "magrittr",
        "pillar",
        "pkgconfig",
        "rlang",
        "vctrs"
      ]
    },
    "utf8": {
      "Package": "utf8",
      "Version": "1.2.2",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "c9c462b759a5cc844ae25b5942654d13",
      "Requirements": []
    },
    "vctrs": {
      "Package": "vctrs",
      "Version": "0.5.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "970324f6572b4fd81db507b5d4062cb0",
      "Requirements": [
        "cli",
        "glue",
        "lifecycle",
        "rlang"
      ]
    },
    "viridisLite": {
      "Package": "viridisLite",
      "Version": "0.4.1",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "62f4b5da3e08d8e5bcba6cac15603f70",
      "Requirements": []
    },
    "withr": {
      "Package": "withr",
      "Version": "2.5.0",
      "Source": "Repository",
      "Repository": "RSPM",
      "Hash": "c0e49a9760983e81e55cdd9be92e7182",
      "Requirements": []
    }
  }
}
```

As you can see, this file lists the R version that was used as well as the different libraries used in the project. It also lists the versions of these libraries, where they came from (CRAN or GitHub, for example) and their own requirements. So someone who wants to run the original script in a similar environment has all the info needed to do it. {renv} also provides a simple way to install all of this. Simply put the renv.lock file in the same folder as the original script and run renv::restore(), and the right packages with the right versions get automagically installed (and without interfering with your system-wide, already installed library of packages). The “only” difficulty that you might have is installing the right version of R. If this is a recent enough version of R, this shouldn’t be a problem, but installing old software might be difficult. For example, installing R version 2.5 might, or might not, be possible depending on your operating system (I don’t like Microsoft Windows, but generally speaking it is quite easy to install very old software on it, whereas this can be quite difficult on Linux distros. So I guess this could work more easily on Windows). Then there are also the system libraries that your script might need, and it might also be difficult to install older versions of these. So that’s why I said that providing the renv.lock file is the bare minimum. But before seeing how we can deal with that, let’s discuss a scenario 1bis, which is the case where you want to run an old script (say, from 5 years ago), but there’s no renv.lock file to be found.
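Since installing the right version of R is the part that renv::restore() does not handle for you, it can help to at least detect a mismatch before restoring. Here is a minimal base R sketch; the helper names are hypothetical, and it uses a regular expression instead of a proper JSON parser (such as the {jsonlite} package), relying on the fact that, as in the lock file above, the top-level "R" object starts with its "Version" field.

```r
# Hypothetical helpers, base R only. A JSON parser such as {jsonlite} would be
# more robust; this regular expression assumes that the top-level "R" object
# starts with its "Version" field, as in the lock file above.
locked_r_version <- function(lock_text) {
  pattern <- '"R"\\s*:\\s*\\{\\s*"Version"\\s*:\\s*"([^"]+)"'
  m <- regmatches(lock_text, regexec(pattern, lock_text, perl = TRUE))[[1]]
  if (length(m) < 2) stop("no R version found in the lock file")
  m[2]  # the captured version string, e.g. "4.2.1"
}

# TRUE if the running R matches the lock file, otherwise a warning and FALSE
r_matches_lock <- function(lock_text, running = getRversion()) {
  locked <- package_version(locked_r_version(lock_text))
  if (locked != running) {
    warning(sprintf("renv.lock records R %s but this session runs R %s",
                    as.character(locked), as.character(running)))
    return(FALSE)
  }
  TRUE
}

# Usage, from the folder that contains renv.lock:
# r_matches_lock(paste(readLines("renv.lock"), collapse = "\n"))
```

This only tells you that something is off; actually getting the right R version is what the Docker part below is about.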

Scenario 1bis: old script, no renv.lock file

For these cases I would have used {checkpoint}, but as explained in the intro, MRAN is getting shut down, and with it out of the picture {checkpoint} will cease to work.

The way {checkpoint} worked is that you would simply add the following line at the very top of the script in question:

```r
checkpoint::checkpoint("2018-12-12")
```

and this would download the packages needed for the script from that specific date and run your script. Really, really useful. But unfortunately, Microsoft decided that MRAN should get the axe.

So what else is there? While researching for this blog post, I learned about {groundhog}, which supposedly provides the same functionality. Suppose I have a script from 5 years ago that loads the following libraries:

```r
library(purrr)
library(ggplot2)
```

By rewriting this like so (and installing {groundhog} beforehand):

```r
library(groundhog)

groundhog.library("
library(purrr)
library(ggplot2)",
  "2017-10-04",
  tolerate.R.version = "4.2.2"
)
```

{purrr} and {ggplot2} get downloaded as they were on the date I provided. If you want to know why I had to use the “tolerate.R.version” option, this is because if you try to run it without it, you get the following very useful message:

```text
---------------------------------------------------------------------------
|IMPORTANT.
| Groundhog says: you are using R-4.2.2, but the version of R current
| for the entered date, '2017-10-04', is R-3.4.x. It is recommended
| that you either keep this date and switch to that version of R, or
| you keep the version of R you are using but switch the date to
| between '2022-04-22' and '2023-01-08'.
|
| You may bypass this R-version check by adding:
| `tolerate.R.version='4.2.2'` as an option in your groundhog.library()
| call. Please type 'OK' to confirm you have read this message.
| >ok
```

That’s pretty neat, as it tells you “hey, getting the right packages is good, but if your R version is not the same, you’re not guaranteed to get the same results back, and this might not even work at all”.
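The check {groundhog} performs here boils down to mapping a date to the R release series that was current on that date. Below is a rough sketch of that idea, not {groundhog}’s actual code: the function name is made up, and the table only lists the x.y.0 release dates from R 3.2.0 to R 4.2.0, so it would need extending for later dates.

```r
# Hypothetical helper: which R release series (e.g. "3.4") was current on `date`?
# Only x.y.0 release dates from R 3.2.0 to R 4.2.0 are listed; any later date
# maps to "4.2", so extend both vectors for more recent dates.
r_series_for_date <- function(date) {
  series   <- c("3.2", "3.3", "3.4", "3.5", "3.6", "4.0", "4.1", "4.2")
  released <- as.Date(c("2015-04-16", "2016-05-03", "2017-04-21", "2018-04-23",
                        "2019-04-26", "2020-04-24", "2021-05-18", "2022-04-22"))
  # findInterval() gives the index of the last release on or before `date`
  idx <- findInterval(as.Date(date), released)
  if (idx == 0) stop("date is earlier than the oldest release in the table")
  series[idx]
}

r_series_for_date("2017-10-04")
```

For the date used above this gives "3.4", which agrees with the R-3.4.x that {groundhog} asks for.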

So, here’s what happens when I try to install these packages (on my Windows laptop, as most people would do), without installing the right version of R as suggested by {groundhog}:

```text
+ Will now attempt installing 5 packages from source.
groundhog says: Installing 'magrittr_1.5', package #1 (from source) out of 5 needed
> As of 16:12, the best guess is that all 5 packages will install around 16:14
trying URL 'https://packagemanager.rstudio.com/all/latest/src/contrib/Archive/magrittr/magrittr_1.5.tar.gz'
Content type 'application/x-tar' length 200957 bytes (196 KB)
downloaded 196 KB
groundhog says: Installing 'rlang_0.1.2', package #2 (from source) out of 5 needed
> As of 16:12, the best guess is that all 5 packages will install around 16:14
trying URL 'https://packagemanager.rstudio.com/all/latest/src/contrib/Archive/rlang/rlang_0.1.2.tar.gz'
Content type 'application/x-tar' length 200867 bytes (196 KB)
downloaded 196 KB
groundhog says: Installing 'Rcpp_0.12.13', package #3 (from source) out of 5 needed
> As of 16:13, the best guess is that all 5 packages will install around 16:14
trying URL 'https://packagemanager.rstudio.com/all/latest/src/contrib/Archive/Rcpp/Rcpp_0.12.13.tar.gz'
Content type 'application/x-tar' length 3752364 bytes (3.6 MB)
downloaded 3.6 MB
Will try again, now showing all installation output.
trying URL 'https://packagemanager.rstudio.com/all/latest/src/contrib/Archive/Rcpp/Rcpp_0.12.13.tar.gz'
Content type 'application/x-tar' length 3752364 bytes (3.6 MB)
downloaded 3.6 MB
* installing *source* package 'Rcpp' ...
** package 'Rcpp' successfully unpacked and MD5 sums checked
staged installation is only possible with locking
** using non-staged installation
** libs
g++ -std=gnu++11 -I"c:/Users/Bruno/AppData/Roaming/R-42~1.2/include" -DNDEBUG -I../inst/include/ -I"c:/rtools42/x86_64-w64-mingw32.static.posix/include" -O2 -Wall -mfpmath=sse -msse2 -mstackrealign -c Date.cpp -o Date.o
In file included from ../inst/include/RcppCommon.h:67,
from ../inst/include/Rcpp.h:27,
from Date.cpp:31:
../inst/include/Rcpp/sprintf.h: In function 'std::string Rcpp::sprintf(const char*, ...)':
../inst/include/Rcpp/sprintf.h:30:12: warning: unnecessary parentheses in declaration of 'ap' [-Wparentheses]
30 | va_list(ap);
| ^~~~
../inst/include/Rcpp/sprintf.h:30:12: note: remove parentheses
30 | va_list(ap);
| ^~~~
| - -
In file included from ../inst/include/Rcpp.h:77,
from Date.cpp:31:
../inst/include/Rcpp/Rmath.h: In function 'double R::pythag(double, double)':
../inst/include/Rcpp/Rmath.h:222:60: error: '::Rf_pythag' has not been declared; did you mean 'pythag'?
222 | inline double pythag(double a, double b) { return ::Rf_pythag(a, b); }
| ^~~~~~~~~
| pythag
make: *** [c:/Users/Bruno/AppData/Roaming/R-42~1.2/etc/x64/Makeconf:260: Date.o] Error 1
ERROR: compilation failed for package 'Rcpp'
* removing 'C:/Users/Bruno/Documents/R_groundhog/groundhog_library/R-4.2/Rcpp_0.12.13/Rcpp'
The package 'Rcpp_0.12.13' failed to install!
groundhog says:
The package may have failed to install because you are using R-4.2.2
which is at least one major update after the date you entered '2017-10-04'.
You can try using a more recent date in your groundhog.library() command,
or run it with the same date using 'R-3.4.4'
Instructions for running older versions of R:
http://groundhogr.com/many
---------------- The package purrr_0.2.3 did NOT install. Read above for details -----------------
Warning message:
In utils::install.packages(url, repos = NULL, lib = snowball$installation.path[k], :
installation of package 'C:/Users/Bruno/AppData/Local/Temp/RtmpyKYXFd/downloaded_packages/Rcpp_0.12.13.tar.gz' had non-zero exit status
```

As you can see, it failed, very likely because of the version mismatch that {groundhog} complained about: the compiler error shows that this old version of Rcpp calls ::Rf_pythag, which the headers of R 4.2.2 no longer declare. I also tried on my Linux workstation, and got the same outcome. In any case, I want to stress that this is not {groundhog}’s fault, but is due to the fact that I was here only concerned with packages; as I said multiple times now, reproducibility is on a continuum, and you also need to deal with the R version, the OS and system libraries. So for now, we only got part of the solution.

By the way, you should know 3 more things about {groundhog}:

- The earliest available date is, in theory, any date. However, according to its author, {groundhog} should work reliably with a date as early as “2015-04-16”. That’s because the oldest R version {groundhog} is compatible with is R 3.2. However, again according to its author, it should be possible to patch {groundhog} to work with any earlier versions of R.

- {groundhog}’s developer is planning to save the binary packages from MRAN so that {groundhog} will continue offering binary packages once MRAN is out of the picture, which will make installing these packages more reliable.

- On Windows and macOS, {groundhog} installs binary packages if they’re available (which is basically always the case; in the example above it was not, because I was using Posit’s CRAN mirror, and I don’t think they have binary packages for Windows that are that old. If you use another mirror, that should not be a problem). So if you install the right version of R, you’re almost guaranteed that it’s going to work. But, there is always a but, this also depends on hardware now. I’ll explain in the last part of this blog post, so read on.

Docker to the rescue

The full solution in both scenarios involves Docker. If you are totally unfamiliar with Docker, you can imagine that Docker makes it easy to set up a Linux virtual machine and run it. In Docker, you use Dockerfiles (which are configuration files) to define Docker images, and you can then run containers (your VMs, if you wish) based on those images. Inside a Dockerfile you can declare which operating system you want to use and what you want to run inside of it. For example, here’s a very simple Dockerfile that prints “Hello, World!” on the Ubuntu operating system (a popular Linux distribution):

```dockerfile
FROM ubuntu:latest

RUN echo "Hello, World!"
```

You then need to build the image as defined by this Dockerfile. (Don’t try to follow along with your own computer for now; I’ll link to resources below so that you can get started if you’re interested. What matters is that you understand why Docker is needed.)

Building the image can be achieved by running this command in the folder where the Dockerfile is located:

```shell
docker build -t hello .
```

which produces the following output:

```text
Sending build context to Docker daemon 2.048kB
Step 1/2 : FROM ubuntu:latest
---> 6b7dfa7e8fdb
Step 2/2 : RUN echo "Hello, World!"
---> Running in 5dfbff5463cf
Hello, World!
Removing intermediate container 5dfbff5463cf
---> c14004cd1801
Successfully built c14004cd1801
Successfully tagged hello:latest
```

You should see “Hello, World!” inside your terminal: RUN instructions are executed while the image is being built, which is why the message shows up in the build output. You can then run a container from this image with:

```shell
docker run --rm -d --name hello_container hello
```

Ok, so these are the very basics. Now why is that useful? It turns out that there’s the so-called Rocker project, which provides a collection of Dockerfiles for current, but also older, versions of R. So if we go back to our renv.lock file from before, we can see which R version was used (it was R 4.2.1) and define a new Dockerfile that builds upon the Dockerfile from the Rocker project for R version 4.2.1.

Let’s first start by writing a very simple script. Suppose that this is the script that we want to make reproducible:

```r
library(purrr)
library(ggplot2)

data(mtcars)

myplot <- ggplot(mtcars) +
  geom_line(aes(y = hp, x = mpg))

ggsave("/home/project/output/myplot.pdf", myplot)
```

First, let’s assume that I did my homework and that the renv.lock file from before is actually the one that was generated at the time this script was written. In that case, you could write a Dockerfile with the correct version of R and use the renv.lock file to install the right packages. This Dockerfile would look like this:

```dockerfile
FROM rocker/r-ver:4.2.1

RUN mkdir /home/project
RUN mkdir /home/project/output

COPY renv.lock /home/project/renv.lock
COPY script.R /home/project/script.R

RUN R -e "install.packages('renv')"
RUN R -e "setwd('/home/project/');renv::restore(confirm = FALSE)"

CMD R -e "source('/home/project/script.R')"
```

We need to put the renv.lock file, as well as the script script.R, in the same folder as the Dockerfile, and then build and run the image:

```shell
# Build it with
docker build -t project .
```

Then run the container (and mount a volume to get the plot back; don’t worry if you don’t know what volumes are, I’ll link resources at the end):

```shell
docker run --rm -d --name project_container -v /path/to/your/project/output:/home/project/output:rw project
```

Even if you’ve never seen a Dockerfile in your life, you likely understand what is going on here: the first line pulls a Docker image that contains R version 4.2.1 pre-installed on Ubuntu. Then, we create directories to hold our files, we copy said files into them, and then run several R commands to install the packages as defined in the renv.lock file and run our script in an environment that not only has the right versions of the packages but also the right version of R. This script then saves the plot in a folder called output/, which we link to a folder also called output/ but on our machine, so that we can then look at the generated plot (this is what -v /path/to/your/project/output:/home/project/output:rw does). Just as this script saves a plot, it could be doing any arbitrarily complex thing, like compiling an Rmarkdown file, running a model, etc, etc.
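If you do this for several projects, writing that Dockerfile by hand gets repetitive. Here’s a hypothetical helper (write_renv_dockerfile() is not part of {renv} or of the Rocker project) that writes the same Dockerfile as above for a given R version, for example the one recorded in renv.lock. It assumes the same file names and folders as above.

```r
# Hypothetical helper: write the renv-based Dockerfile shown above for a given R version.
write_renv_dockerfile <- function(r_version, path = "Dockerfile") {
  # This sketch only accepts full versions such as "4.2.1" for the rocker/r-ver tag
  if (!grepl("^[0-9]+\\.[0-9]+\\.[0-9]+$", r_version)) {
    stop("r_version should look like '4.2.1'")
  }
  lines <- c(
    sprintf("FROM rocker/r-ver:%s", r_version),
    "RUN mkdir /home/project",
    "RUN mkdir /home/project/output",
    "COPY renv.lock /home/project/renv.lock",
    "COPY script.R /home/project/script.R",
    "RUN R -e \"install.packages('renv')\"",
    "RUN R -e \"setwd('/home/project/');renv::restore(confirm = FALSE)\"",
    "CMD R -e \"source('/home/project/script.R')\""
  )
  writeLines(lines, path)
  invisible(path)
}

write_renv_dockerfile("4.2.1", path = file.path(tempdir(), "Dockerfile"))
```

The point is simply that the R version in the FROM line and the one in renv.lock should never drift apart.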

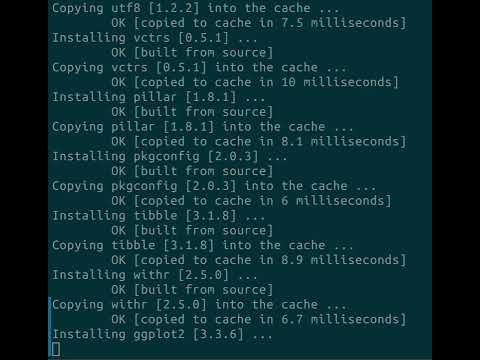


Now, let’s do the same thing but for our scenario 1bis that relied on {groundhog}. Before writing the Dockerfile, here’s how you should change the script: replace the library() calls at the very top with a call to {groundhog}, so that the script looks like this:

```r
groundhog::set.groundhog.folder("/home/groundhog_folder")

groundhog::groundhog.library("
library(purrr)
library(ggplot2)",
  "2017-10-04"
)

data(mtcars)

myplot <- ggplot(mtcars) +
  geom_line(aes(y = hp, x = mpg))

ggsave("/home/project/output/myplot.pdf", myplot)
```

I also created a new script, install_deps.R, that installs the dependencies of my script when building the Docker image. This way, when I run the container, nothing gets installed anymore. Here’s what this script looks like:

```r
groundhog::set.groundhog.folder("/home/groundhog_folder")

groundhog::groundhog.library("
library(purrr)
library(ggplot2)",
  "2017-10-04"
)
```

It’s exactly the same as the beginning of the main script. Now here comes the Dockerfile, and this time it’s going to be a bit more complicated:

```dockerfile
FROM rocker/r-ver:3.4.4

RUN echo "options(repos = c(CRAN='https://packagemanager.rstudio.com/cran/latest'), download.file.method = 'libcurl')" >> /usr/local/lib/R/etc/Rprofile.site

RUN mkdir /home/project
RUN mkdir /home/groundhog_folder
RUN mkdir /home/project/output

COPY script.R /home/project/script.R
COPY install_deps.R /home/project/install_deps.R

RUN R -e "install.packages('groundhog');source('/home/project/install_deps.R')"

CMD R -e "source('/home/project/script.R')"
```

As you can see from the first line, this time we’re pulling an image that comes with R 3.4.4. This is because R 3.4.x was the current release series as of 2017-10-04, the date we assumed this script was written on, and 3.4.4 is the last patch release of that series (it is also the version {groundhog} suggested earlier). Because this is now quite old, we need to add some more stuff to the Dockerfile to make it work. First, I change the repositories to the current mirror from Posit. This is because the repositories of this image are set to MRAN at a fixed date. This was done at the time for reproducibility, but now MRAN is getting shut down, so we need to change the repositories or else our container will not be able to download packages. Pointing to the latest snapshot is not a problem here, because {groundhog} takes care of installing the right package versions. Then I create the necessary folders and run the install_deps.R script, which is the one that will install the packages. This way, the packages get installed when building the Docker image, and not when running the container, which is preferable.
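The RUN echo line above fixes the repositories once and for all in the image. The same idea can be expressed in R, for instance at the top of a script that runs in an older image whose repositories still point to MRAN. This is only a sketch: the function name is hypothetical, and it simply assumes that MRAN URLs contain mran.microsoft.com.

```r
# Hypothetical helper: swap any MRAN repository for another CRAN mirror,
# leaving the other repositories (and their names) untouched.
replace_mran <- function(repos = getOption("repos"),
                         mirror = "https://packagemanager.rstudio.com/cran/latest") {
  is_mran <- grepl("mran.microsoft.com", repos, fixed = TRUE)
  repos[is_mran] <- mirror
  repos
}

options(repos = replace_mran())
```

Doing it in the Dockerfile, as above, has the advantage that every R session in the container gets the fix without touching the script.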

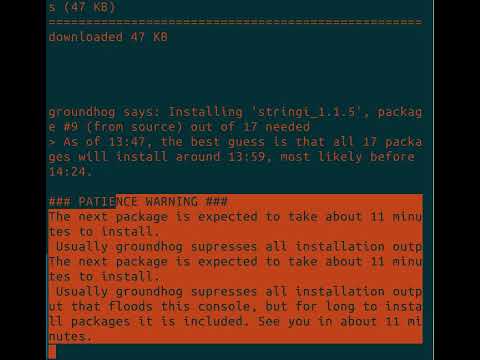

Finally, the main script gets run, and an output gets produced.

Now all of this may seem complicated, and to be honest it is. Reproducibility is no easy task, but I hope that I’ve convinced you that by combining {renv} and Docker, or {groundhog} and Docker, it is possible to rerun any analysis. But you do have to be familiar with these tools, and there’s also another issue with using Docker. Docker works on Windows, macOS and Linux, but the containers it runs (at least the ones discussed here) are Linux distributions, usually Ubuntu. But what if the original analysis was done on Windows or macOS? This can be a problem if the script relies on some Windows- or macOS-specific things, which can happen even with a language like R that is available on all platforms. For example, I’ve recently noticed that the untar() function in R, which is used to extract tar.gz archives, behaves differently on Windows than on Linux. So ideally, even if you’re running your analysis on Windows, you should try to distribute a working Dockerfile alongside your paper (if you’re a researcher; if you’re working in the private sector, you should do the same for each project). Of course, that is quite demanding, and you would need to learn about these tools, or hire someone to do that for you… But a natural question is then, well, “why use Docker at all? Since it’s easy to install older versions of R on Windows and macOS, wouldn’t an renv.lock file suffice? Or even just {groundhog}, which is arguably even easier to use?”

Well, more on this later. I still need to discuss scenario 2 first.

Scenario 2: offering an environment that is made for reproducibility

Ok, so this one is easier. In this scenario, you have no idea what people are going to use, so you cannot generate an renv.lock file beforehand, and {groundhog} is of no help either because, well, there are no scripts yet to actually make reproducible. This is the situation I was in for the project on the code longevity of the R programming language that I discussed at the end of last year. The solution is to write a Dockerfile that people can modify and run; this in turn produces some results that can then be shared. This Dockerfile builds upon an image defined by another Dockerfile, and that other Dockerfile is made for reproducibility. How? It is based on Ubuntu 22.04, compiles R 4.2.2 from source, and sets the repositories to https://packagemanager.rstudio.com/cran/__linux__/jammy/2022-11-21. This way, the packages get downloaded exactly as they were on November 21st 2022. So every time the image defined by this Dockerfile gets built, we get the same environment, at least as far as R and its packages are concerned.

It should also be noted that this solution can be used in the case of scenario 1bis. Let’s say I have a script from August 2018; by using a Docker image that ships the version of R that was current at that time (which should be R version 3.5.x) and the Ubuntu release of that time (18.04, codenamed Bionic Beaver), and then using the Posit package manager at a frozen date, for example https://packagemanager.rstudio.com/cran/__linux__/bionic/2018-08-16, I should be able to reproduce an environment that is close enough. However, the earliest snapshot available on Posit’s package manager dates from October 2017, so anything older than that cannot be reproduced this way.
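Building such a frozen repository URL is mechanical, so here’s a small sketch that does it from an Ubuntu codename and a date, following the pattern of the two URLs above, and then points the current R session to it. The function names are hypothetical, and the sketch does not check whether a snapshot actually exists for that date (which, as said above, is not the case before October 2017).

```r
# Hypothetical helpers: build a frozen Posit Package Manager URL following the
# pattern https://packagemanager.rstudio.com/cran/__linux__/<codename>/<date>
ppm_snapshot_url <- function(codename, date) {
  date <- as.Date(date)  # as.Date() stops on strings it cannot parse as dates
  sprintf("https://packagemanager.rstudio.com/cran/__linux__/%s/%s",
          codename, format(date, "%Y-%m-%d"))
}

# Point the current R session to that snapshot; returns the URL invisibly
use_frozen_repo <- function(codename, date) {
  url <- ppm_snapshot_url(codename, date)
  options(repos = c(CRAN = url))
  invisible(url)
}

ppm_snapshot_url("bionic", "2018-08-16")
```

In a Dockerfile you would rather write the resulting URL into Rprofile.site, like in the {groundhog} example, so that it applies to every R session in the image.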

Ok, great, here are the solutions for reproducibility. But there are still problems.

A single point of failure: Docker

Let’s be blunt: having Docker as the common denominator in all these solutions is a problem, because Docker represents a single point of failure. The problem, however, is not Docker itself, but the infrastructure around it.

Let me explain: Docker is based on many different open source parts, and that’s great. There’s also Podman, made by Red Hat, which is basically a drop-in replacement for Docker (when combined with other tools) and completely open source as well. So the risk does not come from there: even if for some reason Docker were to disappear, or get abandoned or whatever, we could still work with Podman, and it would likely be possible to fork Docker.

But the issue is the infrastructure. For now, using Docker and more importantly hosting images is free for personal use, education, open source communities and small businesses. So this means that a project like Rocker likely pays nothing for hosting all the images they produce (but who knows, I may be wrong on this). And Rocker makes a lot of images. See, at the top of the Dockerfiles I’ve used in this blog post, there’s always a statement like:

FROM rocker/r-ver:4.2.1

As explained before, this states that a pre-built image that ships R version 4.2.1 on Ubuntu gets downloaded. But from where? This image gets downloaded from Docker Hub, see here.
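The answer to “from where?” is hidden in the short image name. Docker’s naming convention treats the first component of a reference as a registry host only if it looks like one. Otherwise, the reference is resolved against Docker Hub at `docker.io`. The helper `qualify_image` in this sketch is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: expand a short image reference into its fully
# qualified form, following Docker's naming convention. If the first path
# component contains a dot or a colon, or is "localhost", it names a
# registry; otherwise the image comes from Docker Hub (docker.io), and
# single-component names live under library/.
qualify_image() {
  ref="$1"
  first=${ref%%/*}
  if [ "$first" = "$ref" ]; then
    # No slash at all, e.g. ubuntu:22.04 -> official image on Docker Hub.
    printf 'docker.io/library/%s\n' "$ref"
    return 0
  fi
  case "$first" in
    *.*|*:*|localhost)
      printf '%s\n' "$ref" ;;           # already names a registry
    *)
      printf 'docker.io/%s\n' "$ref" ;; # Docker Hub namespace, e.g. rocker/
  esac
}
```

So `qualify_image rocker/r-ver:4.2.1` prints `docker.io/rocker/r-ver:4.2.1`, which makes the dependency on Docker Hub explicit. Writing `FROM docker.io/rocker/r-ver:4.2.1` in the Dockerfile would pull the same image.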

Hosting on Docker Hub means that you can download this pre-built image and don’t need to build it each time you need to work with it. You can simply use it as a base for your work, like the image built for reproducibility described in scenario 2. But what happens if at some point in the future Docker changes its licensing model? What if they still have a free tier, but massively limit the number of images that get hosted for free? What if they get rid of the free tier entirely? In my opinion, this is a massive risk that needs to be managed. One option would be for the Rocker project to host the images themselves: it is possible, after all, to run your own self-hosted Docker registry instead of using Docker Hub. But this is costly, not only in terms of storage, but also in terms of the manpower needed to maintain it all.
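The commands for self-hosting are not the hard part. Here is a hypothetical shell sketch of copying an image from Docker Hub into a self-hosted registry. It assumes a registry is already running at `localhost:5000` (for example the open source `registry:2` image), and the `REGISTRY` variable and function names are placeholders:

```shell
#!/bin/sh
# Hypothetical sketch: copy an image from Docker Hub into a self-hosted
# registry, so the copy no longer depends on Docker Hub's terms.
# Assumes a registry is already listening at $REGISTRY, e.g. one started with
#   docker run -d -p 5000:5000 --name registry registry:2
REGISTRY=${REGISTRY:-localhost:5000}

# Name the image will have inside the self-hosted registry,
# e.g. rocker/r-ver:3.4.4 -> localhost:5000/rocker/r-ver:3.4.4
mirror_name() {
  printf '%s/%s\n' "$REGISTRY" "$1"
}

# Pull from Docker Hub, retag for the self-hosted registry, push the copy.
mirror_image() {
  src="$1"
  dst=$(mirror_name "$src")
  docker pull "$src" &&
    docker tag "$src" "$dst" &&
    docker push "$dst"
}
```

After `mirror_image rocker/r-ver:3.4.4`, a Dockerfile could start with `FROM localhost:5000/rocker/r-ver:3.4.4` instead. The real costs, storage and maintenance, remain.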

But maybe even worse: what if at some point in the future you cannot rebuild these images at all? You would need to make sure that the pre-built images do not get lost. And this is already happening because of MRAN getting shut down. In this blog post I’ve used the rocker/r-ver:3.4.4 image to run code from 2017. The problem is that if you look at its Dockerfile, you see that building this image requires MRAN.
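At the scale of a single project, one way to make sure a pre-built image does not get lost is to keep an offline copy with `docker save` and restore it with `docker load`. A minimal sketch, with hypothetical helper names:

```shell
#!/bin/sh
# Hypothetical sketch: keep an offline copy of a pre-built image, so that it
# can be restored even if it can no longer be rebuilt or downloaded.

# File name for the archive, e.g. rocker/r-ver:3.4.4 -> rocker_r-ver_3.4.4.tar
archive_name() {
  printf '%s.tar\n' "$(printf '%s' "$1" | tr '/:' '__')"
}

# Pull the image (if needed), write it with all its layers to a tarball,
# and print the tarball's name.
archive_image() {
  image="$1"
  out=$(archive_name "$image")
  docker pull "$image" && docker save -o "$out" "$image" && echo "$out"
}

# Load a previously saved tarball back into the local image store.
restore_image() {
  docker load -i "$1"
}
```

`docker load` restores the image under its original name and tag. A Dockerfile starting with `FROM rocker/r-ver:3.4.4` should then find it locally instead of going to Docker Hub. The tarball is of course only as safe as the place where you store it.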

In other words, once MRAN is offline, it won’t be possible to rebuild the rocker/r-ver:3.4.4 image, and the Rocker project will need to make sure that the pre-built image currently available on Docker Hub stays available forever. If not, it would be quite hard to rerun code from, say, 2017. The same goes for Posit’s package manager: it could be used as a drop-in replacement for MRAN, but for how long? Even though Posit is a very responsible company, I believe that it is dangerous for such a crucial service to be managed by only one company.

And rebuilding old images will be necessary. This is now the part where I answer the question from above: “why use Docker at all? Since it’s easy to install older versions of R on Windows and macOS, wouldn’t an renv.lock file suffice? Or even just {groundhog}, which is arguably even easier to use?”

The problem is hardware. You see, Apple has recently changed hardware architecture: their new computers switched from Intel-based hardware to their own proprietary architecture (Apple Silicon), based on the ARM specification. What does that mean concretely? It means that the binary packages that were built for Intel-based Apple computers cannot run natively on the new computers. So if you have a recent M1 MacBook and need to install old CRAN packages (for example, by using {groundhog}), these need to be compiled to work on M1. You cannot even install older versions of R, unless you also compile those from source! Now, I have read about a compatibility layer called Rosetta, which makes it possible to run binaries compiled for the Intel architecture on the ARM architecture, and maybe this works well with R and CRAN binaries compiled for Intel. Maybe, I don’t know. But my point is that you never know what might come in the future, so being able to compile from source is important, because compiling from source is what requires the fewest dependencies outside of your control. Relying on binaries is not future-proof.
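A quick way to see which side of this divide a machine is on is `uname -m`. The sketch below maps its output to a CPU family. The list of names is an assumption covering common cases: macOS reports `arm64` on Apple Silicon, and Linux usually reports `aarch64`. `cpu_family` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: map a machine name (by default the one reported by
# `uname -m`) to a CPU family. Binaries such as R itself or compiled CRAN
# packages built for one family do not run natively on the other.
# The name list is an assumption covering common cases only.
cpu_family() {
  case "${1:-$(uname -m)}" in
    x86_64|amd64|i386|i686) echo "x86" ;;     # Intel/AMD
    arm64|aarch64|armv7l)   echo "arm" ;;     # Apple Silicon, ARM servers
    *)                      echo "unknown" ;;
  esac
}
```

On an `arm` machine, Docker can be asked to run an Intel image anyway, e.g. `docker run --platform linux/amd64 rocker/r-ver:3.4.4`. This goes through emulation, though, so like Rosetta it is one more dependency outside of your control.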

And for you Windows users, don’t think that what I just wrote about Apple does not concern you. I think it is very likely that Microsoft will, in the future, push OEMs to develop more ARM-based computers. There is already an ARM version of Windows after all, it has been around for quite some time, and I don’t think Microsoft will kill it any time soon. This is because ARM is much more energy efficient than other architectures, and any manufacturer can build its own ARM CPUs by purchasing a license, which can be quite interesting. For example, with Apple Silicon, Apple can now get exactly the CPUs they want for their machines and make their software work seamlessly with them. I doubt that others will pass up the chance to do the same.

Something else that might happen is that we move more and more towards cloud-based computing, although I think this scenario is less likely than the previous one. But who knows. In that case, the code would quite likely run on Linux servers which, because of energy costs, will likely be ARM-based. Here again, if you want to run your historical code, you’ll have to compile old packages and R versions from source.

Basically, binary packages are in my opinion not a future-proof option, which is why something like Docker will stay and become ever more relevant. But as I argued before, Docker is a single point of failure.

But there might be a way we can solve this and not have to rely on Docker at all.

Guix: toward practical transparent verifiable and long-term reproducible research

The title of this section is the same as the title from a research paper published in 2022 that you can read here.

This paper presents Guix and shows how to use it. Guix is a tool to build, from scratch and in a reproducible manner, the computational environment that was used to run some code for research. Very importantly, Guix doesn’t rely on containers, virtual machines or anything like that. From my (admittedly still limited) understanding of how it works, Guix requires recipes telling it how to build software. Guix integrates with CRAN, so it’s possible to tell Guix “hey, could you build ggplot2” and Guix does as instructed: it builds {ggplot2} and all the required dependencies. And what’s great about Guix is that it’s also possible to build older versions of software.

The authors illustrate this by reproducing results from a 2019 paper, by recreating the environment used at the time, 3 years later.
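Based on my still limited understanding, the workflow looks roughly like this. First, record the exact Guix revision in use today. Later, ask `guix time-machine` to rebuild an environment at that revision. The sketch below assumes Guix is installed on Linux and that CRAN packages follow Guix’s usual `r-` naming scheme. The helper names are hypothetical:

```shell
#!/bin/sh
# Hypothetical sketch of a Guix workflow (Linux only). Assumes Guix is
# installed and that CRAN packages follow Guix's usual naming scheme:
# "r-" + lower-cased CRAN name, with dots and underscores turned into dashes.

# Convert a CRAN package name into its (assumed) Guix package name,
# e.g. data.table -> r-data-table, ggplot2 -> r-ggplot2
guix_name() {
  printf 'r-%s\n' "$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]' | sed 's/[._]/-/g')"
}

# Record the exact Guix revision in use today, so it can be replayed later.
pin_revision() {
  guix describe --format=channels > "${1:-channels.scm}"
}

# Later (even years later): recreate an environment with R and the given
# CRAN packages at the pinned revision, building from source whenever no
# pre-built substitute is available.
pinned_r_shell() {
  channels="$1"
  shift
  pkgs=""
  for p in "$@"; do
    pkgs="$pkgs $(guix_name "$p")"
  done
  # $pkgs is left unquoted on purpose so each name becomes one argument.
  guix time-machine -C "$channels" -- shell r $pkgs
}
```

So you would run `pin_revision` when you finish a project, and years later `pinned_r_shell channels.scm ggplot2 data.table`. For revisions older than late 2021, `guix shell` may not exist yet, and `guix environment --ad-hoc` would be the older equivalent.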

Guix could be a great solution, because it would always allow the recreation of computational environments from source. Architecture changes would then not be a problem, making Guix quite future-proof. The issue I’ve found, though, is that Guix only works on Linux. So if you’re working on Windows or macOS, you would need Docker to recreate a computational environment with Guix. You might think that we’re back to square one, but actually no: you could always have a dedicated Linux machine or server for reproducibility, which eliminates the need for Docker and thus removes that risk entirely.

I’m currently exploring Guix and will report on it in greater detail in the future. In the meantime, I hope to have convinced you that, while reproducibility is no easy task, the tools that are currently available can help you set up a reproducible project. However, for something to be and stay truly reproducible, long-term maintenance is also required.

Conclusion

So here we are: these are, as far as I know, the options to make your code reproducible. But it is no easy task and it takes time. Unfortunately, many scientists are not really concerned with making their code reproducible, simply because there is no real incentive for them to do it. And the task is becoming more and more complex as other risks need to be managed, like the transition of CPUs from Intel-based architectures towards ARM. I’m pretty sure there are many scientists who have absolutely no idea what Intel-based CPUs or ARM CPUs or Rosetta or whatever are. So telling them they need to make their code reproducible is one thing; telling them they need to make it so future-proof that architecture changes won’t matter is like asking for the Moon.

So research software engineers will become more and more crucial, and should be integrated into research teams to deal with this question (this also holds true for the private sector: there should be someone whose job is to make code reproducible across the organization).

Anyway, if you’ve read this far, I appreciate it. This was a long blog post. If you want to know more, you can read this other blog post of mine that explains how to use Docker, and also this other blog post that explains why Docker is not optional (but now that I’ve discovered Guix, maybe it’s Guix that is not optional).

By the way, if you want to grab the scripts and Dockerfiles from this blog post, you can get them here.

Hope you enjoyed! If you found this blog post useful, you might want to follow me on Mastodon or twitter for blog post updates and buy me an espresso or paypal.me, or buy my ebook on Leanpub. You can also watch my videos on youtube. So much content for you to consoom!